
Overview

To develop my skills in deep learning and computer vision, I trained a convolutional neural network (CNN) to classify eight common traffic signs: Stop, Yield, Do Not Enter, No U-Turn, No Left Turn, No Right Turn, One Way (Left), and One Way (Right).

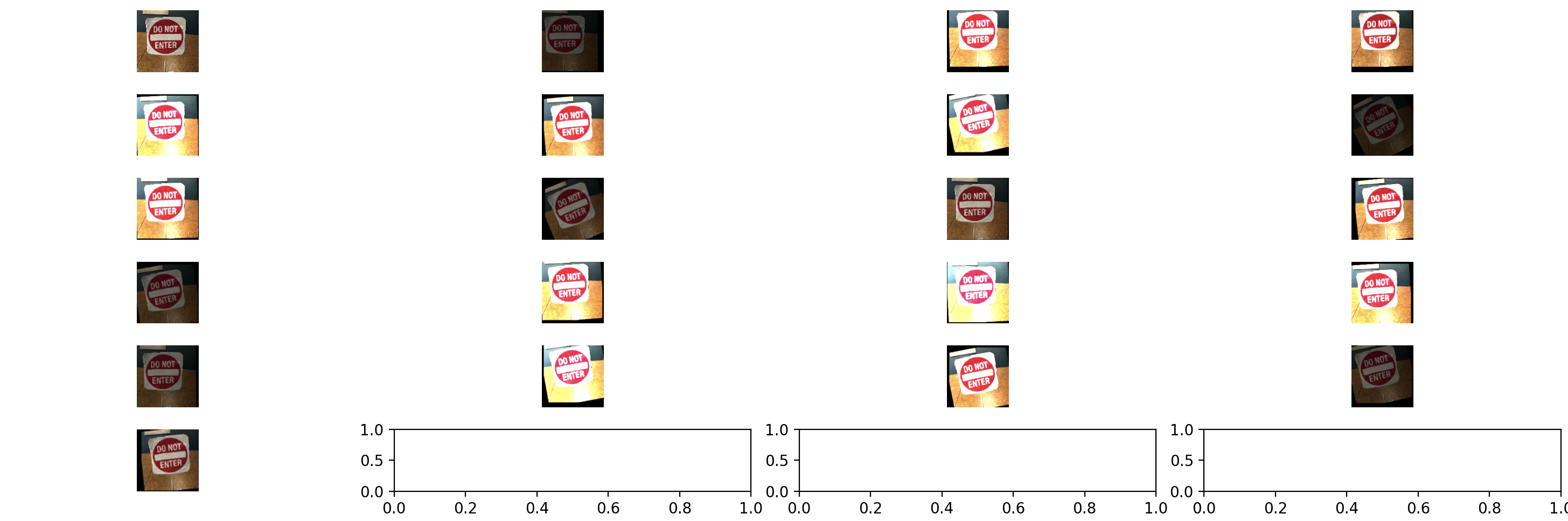

First, I built a custom dataset: I made miniature, freestanding traffic signs and took dozens of pictures of each one. I then applied OpenCV transformations to every image to augment the dataset, artificially enlarging it.

```python
import cv2
import numpy as np

# WIDTH and HEIGHT (the image dimensions in pixels) are defined elsewhere in the project.


# augmentImage returns one randomly transformed copy of an image;
# calling it repeatedly on the same image enlarges the dataset.
def augmentImage(img):
    # Rotation: random angle drawn from a normal distribution
    # (mean 0, large standard deviation of 10 degrees)
    center = (WIDTH / 2, HEIGHT / 2)
    angle = int(np.random.normal(0, 10))
    if angle < 0:
        angle = 360 + angle  # convert a negative angle to the equivalent positive angle
    rot_matrix = cv2.getRotationMatrix2D(center, angle, 1.0)
    img = cv2.warpAffine(img, rot_matrix, (WIDTH, HEIGHT))

    # Translation: shift amounts (in pixels) follow a normal distribution with
    # standard deviation 5, so ~68% of shifts fall within [-5, 5]
    # and ~95% fall within [-10, 10]
    shift_x = np.random.normal(0, 5)
    shift_y = np.random.normal(0, 5)
    M = np.float32([[1, 0, shift_x], [0, 1, shift_y]])  # translation matrix
    img = cv2.warpAffine(img, M, (img.shape[1], img.shape[0]))

    # Contrast adjustment: scale pixel values by a random gain
    contrast_factor = np.random.normal(1.5, 0.5)
    img = cv2.convertScaleAbs(img, alpha=contrast_factor, beta=0)

    # Brightness adjustment: also applied as a multiplicative gain (alpha).
    # convertScaleAbs computes |alpha * img + beta|, so a nonzero beta
    # would add a constant offset instead.
    brightness_factor = np.random.normal(1.5, 0.4)
    img = cv2.convertScaleAbs(img, alpha=brightness_factor, beta=0)

    return img
```
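Each call to `augmentImage` returns a single new variant, so enlarging the dataset means calling it several times per photo and copying the label along with each copy. Below is a minimal sketch of that loop, assuming the images are stacked in a NumPy array of shape (N, H, W, C) with a matching label array; `expand_dataset` and its parameters are hypothetical names, not taken from the project. The usage example swaps in a simple NumPy gain as the augmentation so it runs without OpenCV; in the project, `augmentImage` would be passed instead.

```python
import numpy as np


def expand_dataset(images, labels, n, augment):
    """Return the originals plus n augmented copies of each image (hypothetical helper).

    images: array of shape (N, H, W, C); labels: array of shape (N, ...).
    augment: any function mapping one image to one transformed image.
    """
    out_images = list(images)
    out_labels = list(labels)
    for img, label in zip(images, labels):
        for _ in range(n):
            out_images.append(augment(img))
            out_labels.append(label)  # augmented copies keep the original label
    return np.stack(out_images), np.stack(out_labels)


# Usage with a stand-in augmentation: a random gain, clipped to the uint8 range
rng = np.random.default_rng(0)


def random_gain(img):
    factor = rng.normal(1.0, 0.2)
    return np.clip(img.astype(np.float32) * factor, 0, 255).astype(np.uint8)


images = rng.integers(0, 256, size=(4, 32, 32, 3), dtype=np.uint8)
labels = np.array([0, 1, 2, 3])
big_images, big_labels = expand_dataset(images, labels, n=5, augment=random_gain)
print(big_images.shape, big_labels.shape)  # (24, 32, 32, 3) (24,)
```

Where this step happens matters: if copies are generated before the dataset is split, variants of the same photo can land in both the training set and the validation or test set, which tends to inflate measured accuracy. Splitting first and augmenting only the training images avoids that leak.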

Here's the output of the function for a single image (the original is at the top left):

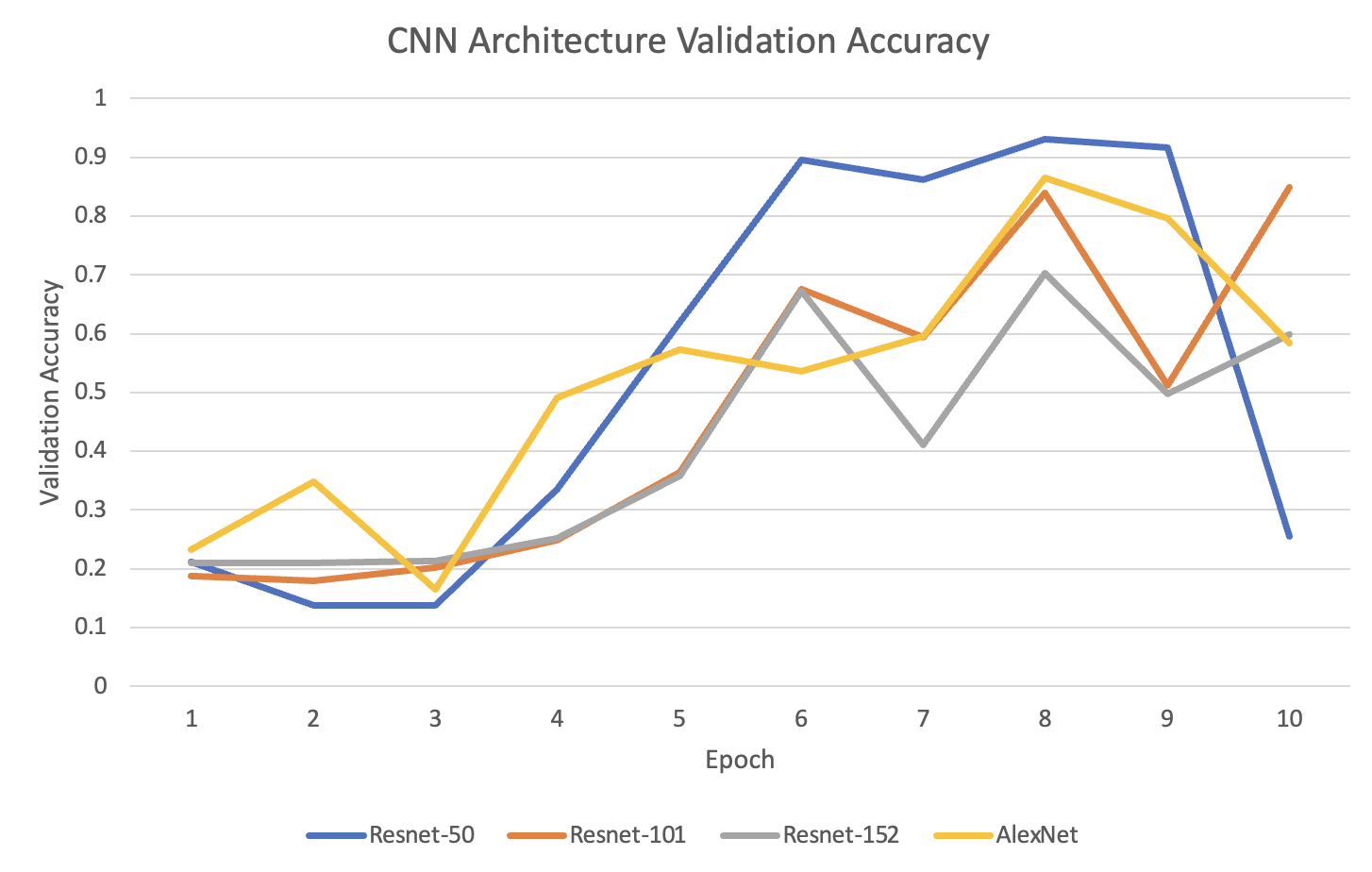
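A grid like this one can be assembled with plain NumPy by tiling equally sized images row by row, with the original in the first cell. A minimal sketch, assuming every image has the same (H, W, C) shape; `make_grid` is a hypothetical helper, and the random arrays in the usage example stand in for real photos. The resulting array could then be written to disk with `cv2.imwrite`.

```python
import numpy as np


def make_grid(images, cols):
    """Tile equally sized images (H, W, C) into one image, row by row.

    The first image lands in the top-left cell; unused cells at the end
    are left black.
    """
    h, w, c = images[0].shape
    rows = -(-len(images) // cols)  # ceiling division
    grid = np.zeros((rows * h, cols * w, c), dtype=images[0].dtype)
    for i, img in enumerate(images):
        r, col = divmod(i, cols)
        grid[r * h:(r + 1) * h, col * w:(col + 1) * w] = img
    return grid


# Usage: an "original" followed by seven "variants" (random stand-ins)
rng = np.random.default_rng(1)
original = rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8)
variants = [original] + [
    rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8) for _ in range(7)
]
grid = make_grid(variants, cols=4)
print(grid.shape)  # (128, 256, 3)
```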

I used the ResNet-50 architecture for the model. I chose it experimentally: over numerous runs, it yielded the best accuracy of all the architectures I tested.
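Choosing an architecture experimentally comes down to training each candidate under the same settings and comparing a validation metric across runs. Below is a minimal sketch of the comparison step, assuming each run's Keras `History.history` dictionary has been kept (it records a `val_accuracy` list when the model is compiled with `metrics=['accuracy']` and trained with validation data). The function name and the placeholder architectures and values in the usage example are hypothetical, not results from this project.

```python
from statistics import mean


def best_architecture(runs):
    """Pick the architecture with the highest mean best-epoch val_accuracy.

    runs maps an architecture name to a list of History.history dicts,
    one per training run. Averaging over several runs reduces the effect
    of a single lucky or unlucky initialization.
    """
    scores = {
        name: mean(max(h["val_accuracy"]) for h in histories)
        for name, histories in runs.items()
    }
    return max(scores, key=scores.get), scores


# Usage with placeholder histories (hypothetical architectures and values)
runs = {
    "arch_a": [{"val_accuracy": [0.50, 0.70]}, {"val_accuracy": [0.55, 0.65]}],
    "arch_b": [{"val_accuracy": [0.60, 0.80]}, {"val_accuracy": [0.62, 0.78]}],
}
best, scores = best_architecture(runs)
print(best)  # arch_b
```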

In the initial stages of training, validation accuracy peaked at only 60-80%. After experimenting with numerous architectures and tuning the hyperparameters, I reached 94.04% accuracy on the validation set and 95.24% accuracy on the test set.
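Accuracy here is the fraction of images whose predicted class matches the true class. Below is a minimal sketch of that computation from a model's softmax outputs and one-hot labels (the label format `CategoricalCrossentropy` expects); `accuracy_from_probs` is a hypothetical helper rather than code from the project, and the arrays are toy values.

```python
import numpy as np


def accuracy_from_probs(probs, one_hot_labels):
    """Fraction of rows where the highest-probability class is the true class."""
    predicted = np.argmax(probs, axis=1)
    actual = np.argmax(one_hot_labels, axis=1)
    return float(np.mean(predicted == actual))


# Toy example: 4 images, 3 classes; the third prediction is wrong
probs = np.array([
    [0.8, 0.1, 0.1],
    [0.2, 0.7, 0.1],
    [0.6, 0.3, 0.1],
    [0.1, 0.1, 0.8],
])
labels = np.eye(3)[[0, 1, 1, 2]]  # one-hot rows for classes 0, 1, 1, 2
print(accuracy_from_probs(probs, labels))  # 0.75
```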

Here's the code that trains the model. It splits the shuffled dataset into training, validation, and test sets, saves the test set for predict.py, and trains a ResNet-50 model with an EarlyStopping callback that monitors validation accuracy against a 90% baseline.

```python
import pickle

import tensorflow as tf

# `images` and `labels` (already shuffled together) and WIDTH, HEIGHT
# are defined earlier in the project. Images are X; labels are y.

TRAIN_SIZE = 0.8  # fraction of the dataset used for training
VAL_SIZE = 0.15   # fraction used for validation; the remaining ~5% is the test set

# Round each split size to the nearest integer
train_size = int(len(images) * TRAIN_SIZE + 0.5)
val_size = int(len(images) * VAL_SIZE + 0.5)

# Split data into training, validation, and test sets
X_train = images[:train_size]
y_train = labels[:train_size]
X_val = images[train_size:train_size + val_size]
y_val = labels[train_size:train_size + val_size]
X_test = images[train_size + val_size:]
y_test = labels[train_size + val_size:]

# Save the test set to a pickle file so predict.py can use it
test_set = {
    'X_test': X_test,
    'y_test': y_test
}
with open("test_data.pkl", "wb") as f:
    pickle.dump(test_set, f)

'''
CONVOLUTIONAL NEURAL NETWORK
'''

# Instantiate an untrained ResNet-50 with an 8-class softmax output layer.
# Keras expects input_shape as (height, width, channels). `pooling` is
# omitted because it only applies when include_top=False.
cnn = tf.keras.applications.resnet.ResNet50(include_top=True, weights=None, input_shape=(HEIGHT, WIDTH, 3), classes=8)

# Compile the model. The output layer already applies softmax, so the loss
# receives probabilities, not logits (from_logits=False).
# CategoricalCrossentropy expects one-hot labels.
cnn.compile(optimizer='adam', loss=tf.keras.losses.CategoricalCrossentropy(from_logits=False), metrics=['accuracy'])

# Sourced from: https://towardsdatascience.com/a-practical-introduction-to-early-stopping-in-machine-learning-550ac88bc8fd
# Define the EarlyStopping callback. With baseline=0.9, training stops if
# val_accuracy fails to beat 0.9 within `patience` epochs; it does not stop
# as soon as 0.9 is reached. Since patience equals epochs (10), this callback
# cannot end training before the last epoch.
early_stopping = tf.keras.callbacks.EarlyStopping(monitor='val_accuracy', patience=10, mode='max', baseline=0.9, verbose=1)

# Fit the CNN to the training data
cnn.fit(X_train, y_train, epochs=10, batch_size=32, validation_data=(X_val, y_val), callbacks=[early_stopping])

# Evaluate the model on the validation set
loss, accuracy = cnn.evaluate(X_val, y_val)
```
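Keras's `EarlyStopping` has no option to stop the moment a metric crosses a target; its `baseline` argument sets a value the metric must beat within `patience` epochs. To end training the first time validation accuracy reaches 90%, a small custom callback works instead. Below is a minimal sketch, assuming TensorFlow 2.x; `StopAtThreshold` is a hypothetical name, and it relies on Keras logging the metric as `val_accuracy`, which happens when the model is compiled with `metrics=['accuracy']` and `fit` receives `validation_data`.

```python
import tensorflow as tf


class StopAtThreshold(tf.keras.callbacks.Callback):
    """Stop training at the end of the first epoch where a metric reaches a target."""

    def __init__(self, monitor="val_accuracy", threshold=0.9):
        super().__init__()
        self.monitor = monitor
        self.threshold = threshold

    def on_epoch_end(self, epoch, logs=None):
        value = (logs or {}).get(self.monitor)
        if value is not None and value >= self.threshold:
            print(f"Epoch {epoch + 1}: {self.monitor} = {value:.4f}, stopping.")
            self.model.stop_training = True  # fit() exits after this epoch


# Usage (cnn, X_train, y_train, X_val, y_val as in the training script):
# cnn.fit(X_train, y_train, epochs=10, batch_size=32,
#         validation_data=(X_val, y_val),
#         callbacks=[StopAtThreshold("val_accuracy", 0.9)])
```

Because this uses the validation set to decide when to stop, the held-out test set remains the unbiased estimate of final accuracy.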